Empowering Teams With Secure, Ethical, and Scalable Technology

At Advisor.AI, our commitment to transforming student success is matched by an unwavering dedication to data privacy, security, and responsible AI. We protect institutional and student information with industry-leading encryption, strict role-based access controls, and continuous security monitoring across every layer of our platform.

Our Security & Responsible AI Team brings together senior engineering leaders, Fortune 500 responsible AI practitioners, data-privacy legal experts, and academic advisors from both public and private institutions. This diverse expertise ensures our platform meets the highest standards in security, compliance, ethical AI development, and continuous innovation.

Security & Compliance

Protecting students and institutional trust at every step.

- FERPA & SOC 2 Compliance: Restricted access to student information, no student data used for AI training, encryption in transit and at rest, and minimal data collected—only what’s needed to support exploration.

- No data tracking or ad targeting: Conversations are never used for advertising, profiling, or third-party tracking, protecting the integrity and trust of the student and advisor experience.

- Data isolation and model separation: Each application operates within its own secure, isolated environment, with clear separation between AI models, data storage, and account access.

- Configurable Role-Based Access: Permissions tailored by program, cohort, or custom groups to ensure only the right people have access.
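As a rough illustration of how access scoped by program, cohort, or custom group could be evaluated, here is a minimal Python sketch. The names `Scope`, `User`, `Student`, and `can_view_student` are hypothetical and chosen only for this example; they are not part of the Advisor AI platform, and the real permission model may differ.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Scope:
    """Hypothetical permission scope: a program, cohort, or custom group."""
    kind: str  # "program", "cohort", or "group"
    name: str


@dataclass
class User:
    """Hypothetical staff account holding the scopes it may access."""
    username: str
    scopes: set[Scope] = field(default_factory=set)


@dataclass
class Student:
    """Hypothetical student record tagged with the scopes it belongs to."""
    student_id: str
    scopes: set[Scope] = field(default_factory=set)


def can_view_student(user: User, student: Student) -> bool:
    """Deny by default; allow only when user and student share a scope."""
    return bool(user.scopes & student.scopes)


nursing = Scope("program", "Nursing")
cohort_2027 = Scope("cohort", "Class of 2027")

advisor = User("advisor_a", {nursing})
student = Student("s-001", {nursing, cohort_2027})
outsider = User("advisor_b", {Scope("program", "Engineering")})

print(can_view_student(advisor, student))   # True
print(can_view_student(outsider, student))  # False
```

The check is deny-by-default: an account with no matching scope sees nothing, which is one common way to ensure only the right people have access.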

Ethical & Responsible AI

SAFE AI: Secure. Accountable. Fair. Effective.

- Human-in-the-Loop: Timely nudges help students connect with advisors for personalized support, reflecting a human-centered system design.

- Comprehensive guardrails and bias mitigation: Built-in guardrails and ongoing bias testing help reduce reputational and societal risks.

-

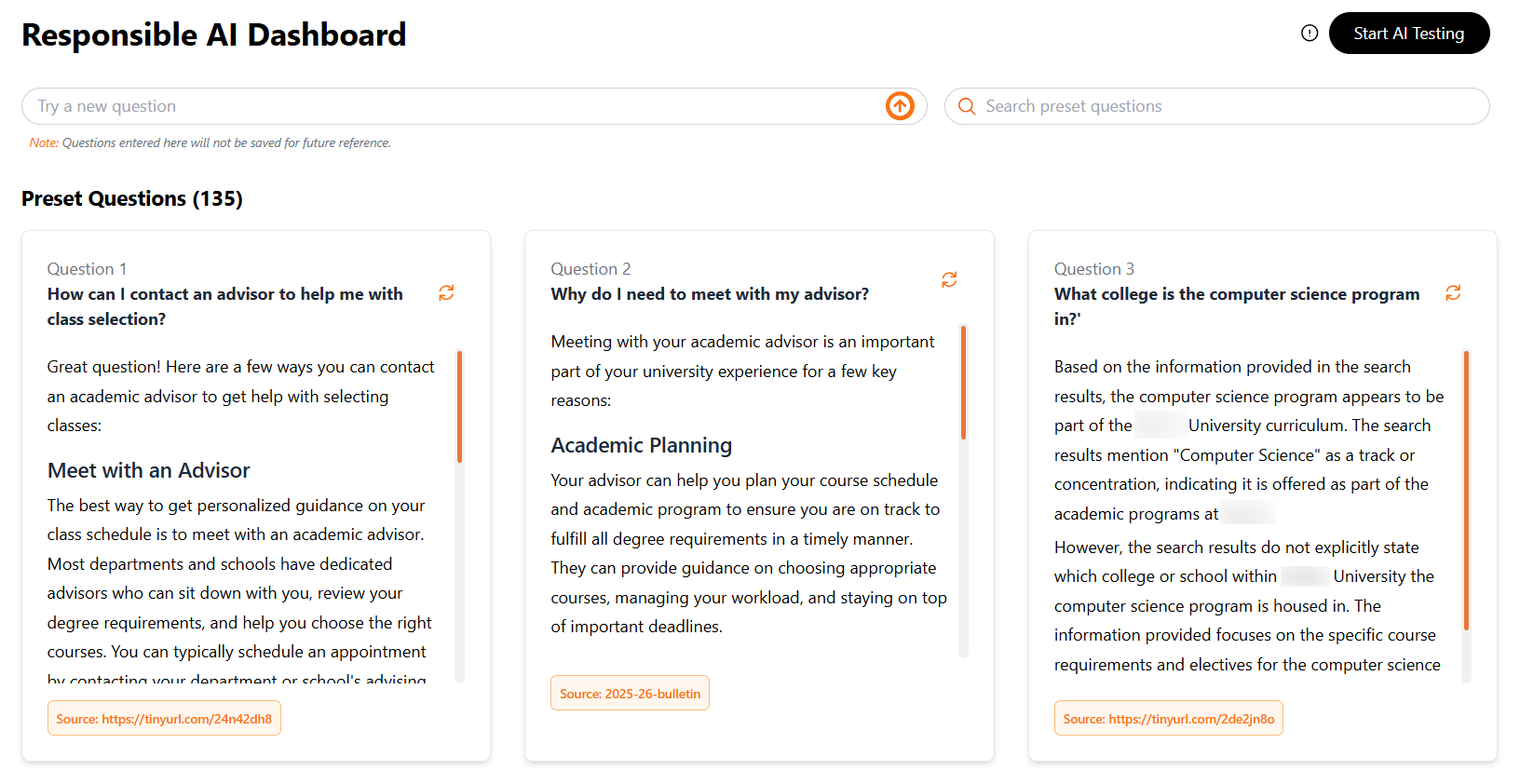

Closed-loop, verified knowledge: Responses are generated from each institution's approved resources and knowledge base—not the open internet—ensuring consistent and reliable information.

- Clear and transparent answers: Sources are cited where applicable, helping students and advising teams trust the information they rely on.

- Ongoing compliance and accountability: Automated AI testing, annual compliance reporting, built-in feedback mechanisms, and audit tracking support continuous oversight and responsible system governance.
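To make the closed-loop and source-citation ideas above more concrete, here is a simplified Python sketch. It answers only from a list of approved documents, returns the source it used, and defers to a human advisor when nothing matches well enough. The names `Document` and `answer`, the `min_overlap` threshold, and the sample knowledge base are all assumptions for illustration. Plain keyword overlap stands in for whatever retrieval method the platform actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical entry in an institution's approved knowledge base."""
    source: str
    text: str


def _words(text: str) -> set[str]:
    # Lowercase and strip simple punctuation so matching is case-insensitive.
    return {w.strip(".,?!:;").lower() for w in text.split()} - {""}


def answer(question: str, approved: list[Document], min_overlap: int = 2) -> dict:
    """Return the best approved passage with its source, or defer to a human."""
    q = _words(question)
    best, best_score = None, 0
    for doc in approved:
        score = len(q & _words(doc.text))
        if score > best_score:
            best, best_score = doc, score
    if best is None or best_score < min_overlap:
        # Nothing approved matches well enough: do not guess, hand off instead.
        return {"answer": None, "sources": [], "refer_to_advisor": True}
    return {"answer": best.text, "sources": [best.source], "refer_to_advisor": False}


# Illustrative sample data, not real institutional content.
kb = [
    Document("Course Catalog", "The biology major requires 120 credits to graduate."),
    Document("Advising FAQ", "Students may drop a course before the end of week two."),
]

print(answer("How many credits does the biology major require?", kb))
print(answer("What is the weather tomorrow?", kb))
```

The first question is answered from the "Course Catalog" entry with that source attached. The second has no adequate match in the approved set, so the sketch returns no answer and flags a referral to an advisor.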

High-Performing, Easy To Scale

- Cloud-Native: Built on AWS for speed, reliability, and global scale.

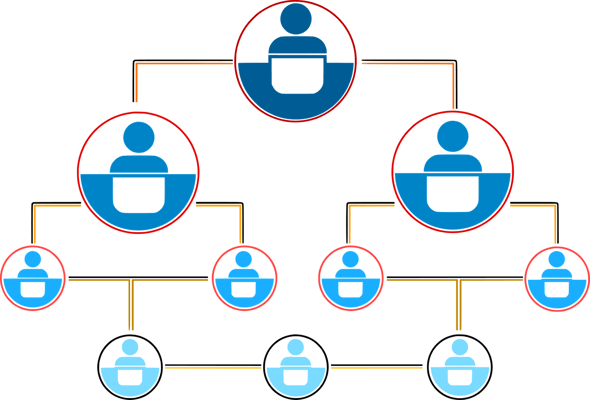

- Modular: Institutions can launch within a department or campus-wide.

- High Availability & Disaster Recovery: Redundant failover and rapid recovery keep student operations consistent and uninterrupted.

- Performance Analytics: Built-in, easy-to-use dashboards provide actionable insights on student engagement and emerging trends.

- Automatic Updates & Maintenance: The cloud-native platform delivers the latest feature updates with minimal downtime, at no additional cost.

Modular System, Easy To Launch

Once institutional needs and strategic objectives are identified, the Advisor AI team develops a collaborative launch plan and tailors the system with university-specific information, such as course catalogs, degree milestones, and resource FAQs, all within a four-week timeframe. Additional integrations are then discussed based on the strategic value of use cases and student engagement results.

The onboarding process is simple: University representatives add the app link to their website while Advisor AI works with IT to establish user authentication and app enrollment. The platform's user-friendly, intuitive nature typically requires only 10 to 15 minutes of training for students and advisors, enabling institutions to transform the student experience and demonstrate institutional effectiveness from day one.

Featured Resources

Finding the Right Balance Outside the Classroom: Navigating AI Ethics While Preserving the Human Touch in Advising and Student Support

Frequently Asked Questions

Why is Advisor AI viewed as a leader in responsible AI adoption in education?

Advisor AI treats ethics as a design principle, not a feature. The platform is intentionally built to support and extend the work of human advisors—not replace them—reinforcing accountability, trust, and student-centered decision-making at scale.

What this really means: It’s like having a really smart GPS that helps you plan your route and explore options, but you’re still the one driving the car.

How does Advisor AI protect institutional and user data?

Advisor.AI uses a system architecture that separates data and model environments for training and testing. This approach is designed to keep institutional data from being exposed to external models or unauthorized access.

What this really means: Your personal information and data stay locked in a safe, within a vault, within a separate building for each institution, rather than floating around the internet.

Does Advisor AI make autonomous decisions?

No. Advisor AI neither approves nor takes action independently. All decisions remain under human control and aligned with institutional policies and governance.

What this really means: It can suggest options, but a real person always makes the final call—just like a teacher reviewing your work before it’s graded.

How does Advisor AI address bias and fairness?

The platform incorporates built-in bias and fairness guardrails. Models are trained on anonymized, representative datasets and continuously monitored to identify and mitigate risks.

What this really means: It’s designed to treat every student or administrative user fairly, not just the loudest, fastest, or most confident ones.

How does the platform avoid over-reliance on AI?

Advisor AI avoids engagement-driven or addictive design patterns. Guidance is delivered in short, purposeful steps, and students are encouraged to consult advisors for high-stakes decisions—supporting informed use rather than dependence.

What this really means: It gives helpful nudges, not constant notifications or pressure to stay glued to the screen.

Why are Advisor AI’s recommendations explainable and trustworthy?

Each recommendation includes a clear rationale grounded in academic catalogs, career pathways, and/or institutional data. This transparency supports trust, adoption, and responsible decision-making. Built-in feedback loops support continuous improvement.

What this really means: It shows its work—so you know why it’s suggesting something, not just what it’s suggesting.